499 error and setting nginx timeout for specific paths on kubernetes ingress

09 Aug, 2023

Timeout, but where?!

Some time ago, my Kubernetes cluster started throwing unexpected timeouts in my clients’ faces. It was an awkward situation, since a timeout never means anything good: something is definitely not OK when your script takes that long to execute, right? I did a quick investigation. It turned out that the root cause was hidden in a third-party vendor’s script, which couldn’t be optimized at that time. The only solution was to bump up the timeout on nginx, but… on which one?!

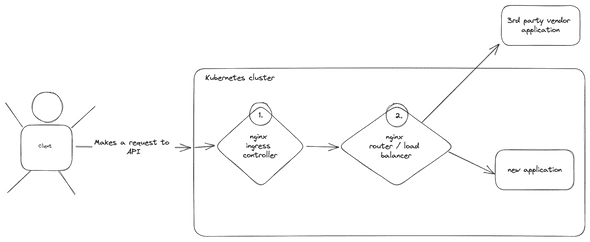

Here is, how the infrastructure looks like in this particular case:

As you may see, we have two hot spots there:

- Ingress Controller to manage traffic inside the cluster (let’s call it “ingress”)

- Nginx, which acts as a load balancer and routes traffic between services inside and outside the cluster (let’s call it “load balancer”)

And now the question is: which service has the problem?! The answer is simple: both. The ingress is the one that reports the error to the client as a 504, but the load balancer is the one that logs the most telling error:

The 499 HTTP error 🫣

This is how the client sees this situation:

- Makes a request to https://sample.com/time/consuming/script/abc123

- Waits more than the default 60 seconds.

- Gets a 504 error signed by nginx.

- Reports a bug and wants money back.

How it looks on the server side:

- Client makes a request to https://sample.com/time/consuming/script/abc123

- Ingress Controller receives the request and forwards it to the Load Balancer

- Load Balancer recognizes that time/consuming/script/* points to the vendor’s script

- Load Balancer forwards the traffic outside the cluster

- 3rd party vendor app (which has no timeout limit on its end) executes the script for longer than 60s

- Load Balancer cannot wait any longer and throws 504

- Ingress Controller receives the 504 and passes it back to the client.

It seems like the simplest solution is to bump up the timeout limit on the Load Balancer, and everything should be fine. Not really. When we set it to ~75s, which should be enough for the vendor script, the Ingress Controller still throws 504, but when you go through the logs, you will see something odd there: a 499 HTTP error, logged by the Load Balancer.

A 499 HTTP error means that the client closed the request. It is a non-standard, nginx-specific status code that ends up in the access log. Wait a moment! How did that happen?! Our client didn’t close the connection! They are waiting there, angry and red-faced, but still waiting! Correct. But take another look at the infrastructure diagram: the Load Balancer’s client is not the person who makes the request. It’s the Ingress Controller. So, here is how things are going right now:

- Client makes a request to https://sample.com/time/consuming/script/abc123

- Ingress Controller receives the request and forwards it to the Load Balancer

- Load Balancer recognizes that time/consuming/script/* points to the vendor’s script

- Load Balancer forwards the traffic outside the cluster

- 3rd party vendor app (which has no timeout limit on its end) executes the script for longer than 60s

- Load Balancer keeps waiting, because we increased the timeouts on its nginx

- Ingress Controller cannot wait any longer and closes its connection to the Load Balancer, so the LB logs a 499 error, and at the same time:

- Ingress Controller throws 504

Gotcha! Ingress Controller is guilty, your honor!
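If you want to play with the numbers, the two timelines above can be modeled in a few lines of Python. This is only a rough sketch, not how nginx works internally. `Hop` and `trace` are hypothetical names used for illustration, the 70s vendor duration is an assumed value, network latency is ignored, and the vendor is assumed to answer 200 eventually. When two hops share the same timeout, the real outcome is a race; the model arbitrarily lets the inner hop give up first, which matches the first timeline.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One proxy in the request chain (hypothetical helper type)."""
    name: str
    timeout_s: float  # how long this hop waits for the next one to answer


def trace(hops, upstream_duration_s):
    """Return {hop name: status that hop reports} for a single request.

    `hops` is ordered from the client side inwards, e.g. ingress first.
    The hop that runs out of patience first answers 504. Hops in front of
    it relay that 504; hops behind it see their client hang up and log 499.
    """
    expired = [i for i, hop in enumerate(hops)
               if hop.timeout_s < upstream_duration_s]
    if not expired:
        # Everybody waited long enough (assumes the upstream answers 200).
        return {hop.name: 200 for hop in hops}

    # Smallest timeout fires first; on a tie, pick the innermost hop.
    first = min(expired, key=lambda i: (hops[i].timeout_s, -i))
    return {hop.name: (504 if i <= first else 499)
            for i, hop in enumerate(hops)}


if __name__ == "__main__":
    vendor_s = 70  # assumed duration of the vendor script

    # Both at the default 60s: LB throws 504, ingress relays it.
    print(trace([Hop("ingress", 60), Hop("load balancer", 60)], vendor_s))
    # -> {'ingress': 504, 'load balancer': 504}

    # Only the LB bumped to 75s: ingress gives up, LB logs 499.
    print(trace([Hop("ingress", 60), Hop("load balancer", 75)], vendor_s))
    # -> {'ingress': 504, 'load balancer': 499}

    # Ingress raised as well: the request finally goes through.
    print(trace([Hop("ingress", 300), Hop("load balancer", 75)], vendor_s))
    # -> {'ingress': 200, 'load balancer': 200}
```

The middle case is the one from the logs: whoever sits in front must be at least as patient as whoever sits behind it.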

How to fix it?

In general, we don’t want to increase the timeout everywhere, on every endpoint we have. Long timeouts let slow requests hold connections open for longer, which can lead to server stability issues and make us an easier target for a DDoS attack. On the other hand, since there is no short-term way to optimize the vendor script, we cannot leave things as they are. That’s why we will increase the timeout only for specific paths on the Ingress Controller.

Note: We can increase the timeout for every endpoint on the Load Balancer, since at the end of the day the Ingress Controller sits in front of it and will handle time management for the whole app for us.

Ingress Controller works based on rules we apply. Our main rule looks like this:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: sample-ingress
      annotations:
        kubernetes.io/ingress.class: "nginx"
    spec:
      tls:
        - hosts:
            - sample.com
            - www.sample.com
      rules:
        - host: sample.com
          http:
            paths:
              # Catch-all: everything goes to the load balancer
              - backend:
                  service:
                    name: load-balancer-nginx
                    port:
                      number: 80
                path: /
                pathType: Prefix

In real life this resource contains a lot more configuration, but I simplified it as much as possible to make a point. As you may see, there is no magic. We just manage the traffic by pushing it to the load-balancer-nginx service. By default, the nginx ingress controller has its read and send timeouts set to 60s, which is the recommended value. Our goal is to increase them only for the /time/consuming/script/* path. The only thing we need to do is to apply a new rule for our ingress:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ingress-timeout
      annotations:
        kubernetes.io/ingress.class: "nginx"
        # Timeouts in seconds, applied to every path in this Ingress
        nginx.ingress.kubernetes.io/proxy-connect-timeout: "300"
        nginx.ingress.kubernetes.io/proxy-send-timeout: "300"
        nginx.ingress.kubernetes.io/proxy-read-timeout: "300"
    spec:
      tls:
        - hosts:
            - sample.com
            - www.sample.com
      rules:
        - host: sample.com
          http:
            paths:
              # Only the slow vendor path gets the longer timeouts
              - backend:
                  service:
                    name: load-balancer-nginx
                    port:
                      number: 80
                path: /time/consuming/script/
                pathType: Prefix

That’s it! The only thing left is to apply the rule, and after a few seconds all requests sent to /time/consuming/script/* will have a 300s timeout.

The key is to put it in a separate Ingress resource, because the annotations section applies to every path listed in the same resource.
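To see why two resources for the same host and backend don’t clash, here is a small sketch of how a request path picks its rule. It is a simplified model under stated assumptions, not the controller’s actual code: it assumes the paths of both resources are merged for the host and the longest matching Prefix path wins, compared segment by segment. The `RULES` table and the function names are hypothetical, and 60 stands for the default timeout of the main rule.

```python
def segments(path):
    """Split a URL path into its non-empty segments: '/a/b/' -> ['a', 'b']."""
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path, request_path):
    """Prefix match on whole path segments, so /script does not match /scripts."""
    rule = segments(rule_path)
    return segments(request_path)[:len(rule)] == rule


# (ingress name, path, read timeout in seconds), mirroring the two resources above
RULES = [
    ("sample-ingress", "/", 60),
    ("ingress-timeout", "/time/consuming/script/", 300),
]


def timeout_for(request_path, rules=RULES):
    """Return (ingress name, timeout) of the longest matching rule, or None."""
    matching = [rule for rule in rules if prefix_matches(rule[1], request_path)]
    if not matching:
        return None
    name, _path, timeout_s = max(matching, key=lambda rule: len(segments(rule[1])))
    return name, timeout_s


if __name__ == "__main__":
    print(timeout_for("/time/consuming/script/abc123"))  # -> ('ingress-timeout', 300)
    print(timeout_for("/some/other/page"))               # -> ('sample-ingress', 60)
```

Everything outside /time/consuming/script/ still falls through to the catch-all rule and keeps the default timeout.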

I hope this helps you solve some problems with unexpected 499 HTTP errors. Take care!